Let’s be honest, Artificial Intelligence is everywhere right now. It feels like every company is talking about it, and if you’re a product manager, you’re probably thinking about what this all means for your job. The good news is, this is the biggest opportunity to come to our field in years. Companies desperately need people who can connect this powerful technology to real customer problems. That person is the AI Product Manager, and it’s the most exciting role in tech today.

But learning about AI can feel overwhelming, with all the technical jargon and hype. That’s why we created this Free AI Product Management course. Our goal is simple: to teach you the practical side of AI product management in plain English. We’ll skip the complicated theory and focus on what you’ll actually do at work. You’ll learn how to spot good opportunities for AI, how to work with your engineering team on AI projects, and how to design products that are genuinely helpful and easy to use. If you want a clear, practical way to bring AI into product management, this course is for you.

About The Course

Ready to step into the world of AI Product Management? We created this Free AI PM Course to be your perfect starting point. We’ll walk you through everything you need to know, from the basics of how Generative AI works to the way companies are building new products with it. You’ll get a clear, behind-the-scenes look at how technologies like LLMs (the brains behind tools like ChatGPT) actually function and learn the step-by-step lifecycle of an AI product. We break down the tricky tech topics like RAG and Prompt Engineering into simple terms, so you can feel confident working with engineering teams.

Our goal is simple: to give you the real-world skills you need to build a career in AI. This course is packed with practical examples from actual companies, showing you exactly how AI is used today. We’ll teach you how to spot good AI opportunities, build a project for your own portfolio, and even get you ready for AI PM interviews. By the end, you won’t just understand AI, you’ll have the confidence and the knowledge to build amazing products and take the next big step in your career. All the videos and materials are completely free when you finish. Come join us and start your journey!

How AI Will Upgrade You as a Product Manager

- Faster discovery: Summarize interviews, cluster themes, and surface opportunities 10× quicker.

- Sharper specs: Turn messy context into structured PRDs, acceptance criteria, and edge cases.

- Smarter decisions: Use retrieval + lightweight models to validate ideas with real data, not vibes.

- Operational leverage: Automate repetitive workflows (support triage, content QA, routing) to free teams for higher-value work.

- Better economics: Learn to model cost per request, control latency, and choose the right model (small + RAG often beats huge).

- Career durability: Build a portfolio that proves you can ship safe, reliable AI features, not just talk about them.

Who Should Take This Course?

This course is ideal for:

- Anyone who wants to become an AI Product Manager: Build your knowledge from the ground up and learn the specialized skills needed to enter this exciting field.

- Aspiring Product Managers: Gain a competitive edge by mastering the principles of AI, setting yourself apart in the job market.

- Product Managers who want to leverage AI: Transition your existing PM skills into the AI space and learn how to integrate cutting-edge technology into your product strategy.

This course covers:

- Core AI use cases for businesses with software or digital experiences.

- Best practices for building AI-powered features and functionality.

- How AI can transform and elevate the product-led organization.

- Applying AI tools and use cases to accelerate product value and business growth.

Session 1: Introduction to Generative AI & the LLM Economy

Get started with the foundations of AI Product Management. Learn what Generative AI is, how Large Language Models (LLMs) actually work, and explore the different types of AI Product Managers shaping today’s industry.

- What is Generative AI and why it matters for Product Managers

- The rise of the LLM economy: ChatGPT, Gemini, Claude, and more

- Types of AI Product Managers (Tech PM, Data PM, Business PM, etc.)

- How Large Language Models (LLMs) actually work (tokens, training, inference)

Slides 👇🏽

Key Takeaways:

- Mindset Over Tactics: AI technologies like RAG and fine-tuning are tactics that will evolve. The timeless mindset of focusing on user problems, business value, and deep curiosity is what creates long-term success.

- Problem First, AI Second: AI is a powerful solution, but it’s still just a solution. Great product management starts by deeply understanding and validating a user or business problem, not by looking for a problem to fit a cool technology.

- The GenAI Value Stack: The AI ecosystem is built on four layers: Infrastructure (e.g., Nvidia), Models (e.g., OpenAI), Applications (e.g., Gamma), and Services (e.g., AI Agencies). Most PMs will find massive opportunities in the Application layer.

- LLMs Predict the Next Word: At its core, an LLM is a sophisticated mathematical model trained on vast amounts of data to become exceptionally good at predicting the next most probable word (or “token”) in a sequence.

- Training Makes Perfect: LLMs learn through pre-training on the entire internet to build a base model, followed by post-training with high-quality human feedback to become helpful, harmless, and aligned with human values.

- The Four Lenses of Product: Every successful product, AI or not, must be Valuable (users want it), Viable (the business can support it), Usable (users can figure out how to use it), and Feasible (engineers can build it).

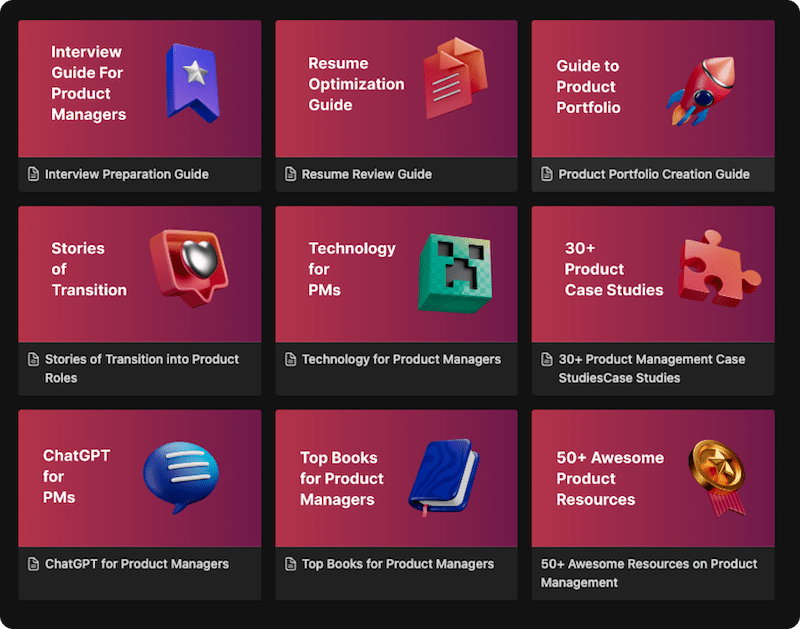

Predictive vs. Generative AI: Understanding the Two Faces of AI

The instructor categorizes AI solutions into two broad types that dominated different eras.

Predictive AI (Traditional AI): This was the most common form of AI before 2022. It is trained on historical data to make predictions or classifications about new data. It excels at supporting decisions by identifying patterns.

Use Cases:

- Pattern Detection: Identifying credit card fraud by spotting anomalous transaction patterns.

- Classification: Labeling an email as “spam” or “not spam.”

- Forecasting: Predicting next month’s sales or tomorrow’s weather based on past data.

- Recommendations: Suggesting what movie to watch on Netflix or what product to buy on Amazon based on your viewing/purchase history.

Generative AI (GenAI): This newer form of AI, popularized by models like GPT, focuses on creating new content. Its capabilities can be summarized by the acronym UTG:

- Understand: It can comprehend context and nuance in text, images, and other data types you provide.

- Transform: It can summarize long documents, edit images, or translate languages.

- Generate: It can create entirely new, original content that didn’t exist before, like writing an email in the style of a specific person or generating a photorealistic image from a text description.

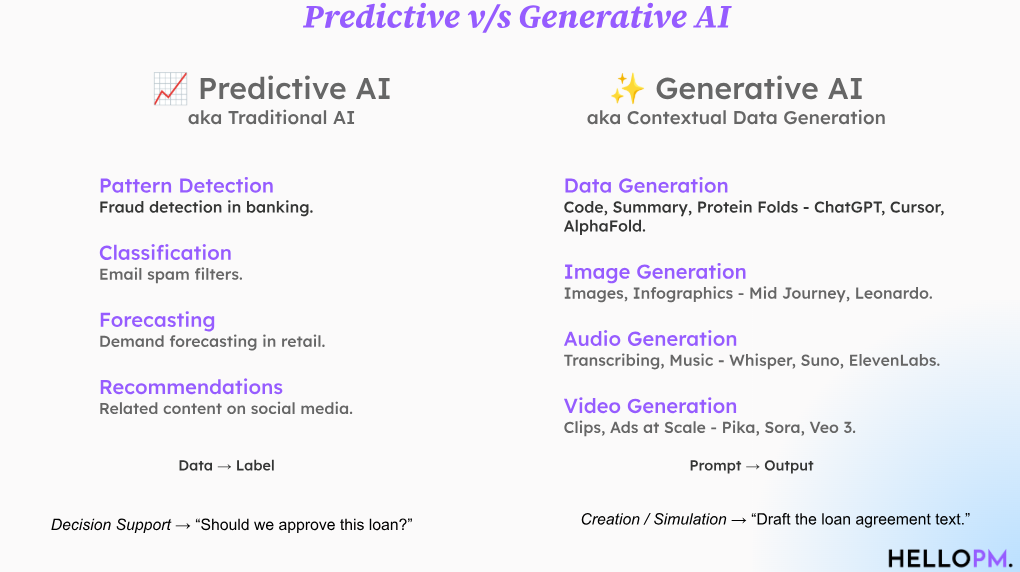

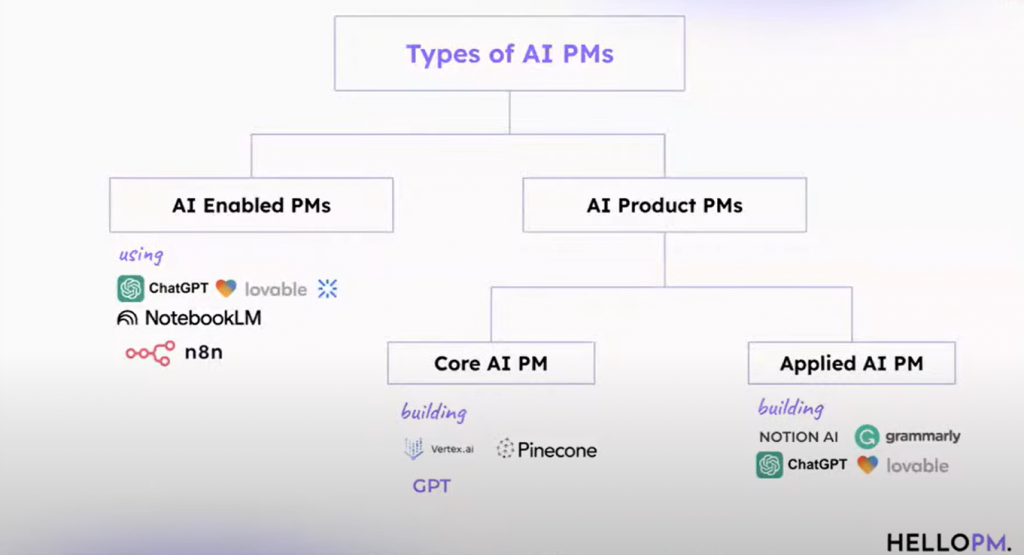

What Kind of AI PM Are You? Core vs. Applied Roles

Based on the value stack, the instructor defines three types of AI Product Managers:

- AI-Enabled PM: This describes virtually every modern PM. They use AI tools like ChatGPT or Claude to enhance their productivity, drafting documents, summarizing research, or generating ideas.

- Applied AI PM: These PMs work in the application layer. They build products or features where AI is a core part of the user experience. A PM working on Notion AI or Grammarly is an Applied AI PM. They integrate models from the layer below to solve specific user problems.

- Core AI PM: These PMs work deep in the model and infrastructure layers. They are responsible for building the foundational models (like GPT-5) or the hardware and platforms they run on. This is a highly technical role requiring a deep understanding of machine learning and data science.

Building Great AI Products: The Four Critical Lenses

Finally, the instructor brings the discussion back to product management, reminding everyone that technology is only one piece of the puzzle. Citing legendary product leader Marty Cagan, he introduces four lenses through which every product idea must be evaluated:

- Valuable: Do users actually want or need this? Does it solve a real problem for them?

- Viable: Does this make sense for our business? Can we make money from it, or does it support our strategic goals?

- Usable: Can users easily figure out how to use it? Is the experience intuitive and well-designed?

- Feasible: Do we have the technical skills, resources, and technology to build this?

The instructor warns that many people entering AI product management focus obsessively on feasibility (the technology). However, a PM’s primary job is to first ensure a product is valuable, viable, and usable. An engineer can’t build a great product if the PM hasn’t figured out what is worth building in the first place.

Product discovery fundamentals (problem first, always)

PMs exist to do two things: solve user/stakeholder problems and, through that, deliver business outcomes (revenue, retention, users). Ideas flow from:

- Business strategy/goals (e.g., revenue growth, retention)

- User research (interviews, behavior)

- Market/ecosystem/competition (e.g., regulation changes)

- Data (product analytics, secondary research)

- Stakeholders (sales, marketing, design, engineering, data science)

- Your domain insight (e.g., you’re a member of the audience you serve)

Collect many problems, validate ruthlessly, and only then explore solutions. AI is one solution path; use it where it beats alternatives for that problem.

Example: If churn stems from poor onboarding, a rule-based checklist might outperform a fancy LLM assistant. Conversely, if support tickets are repetitive and language-heavy, a retrieval-augmented LLM bot could be the better tool.

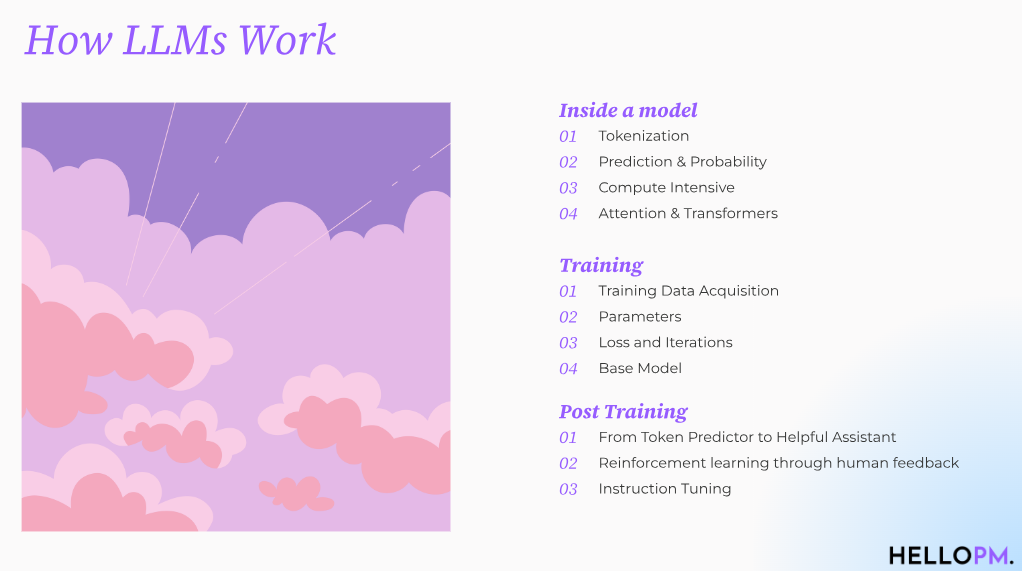

How do LLMs work?

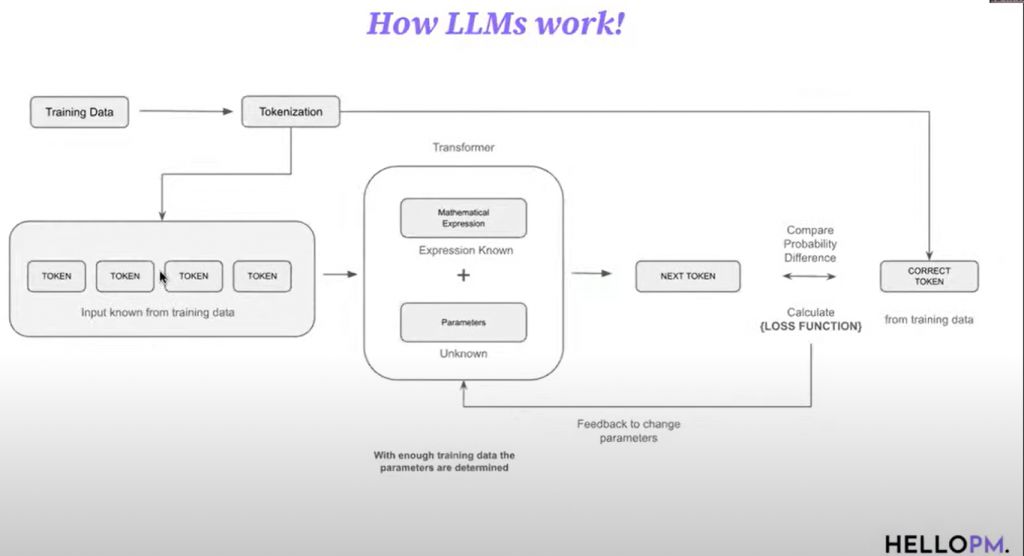

At heart, an LLM is a huge mathematical function with billions of parameters that learns to predict the next token (sub-word unit) given prior tokens.

- Tokenization: text → tokens (numbers).

- Computers operate on numbers; tokenization maps “Today is the first day of AI Sprint” into a short sequence of integers.

- Pre-training (next-token prediction at scale):

- Feed internet-scale text (and increasingly multimodal data).

- For each training example, hide the next token; the model predicts it.

- Compare prediction vs. truth with a loss function; adjust parameters; repeat trillions of times.

- Intuition via algebra: given a formula like y = mx + c, enough (x,y) pairs let you solve for m and c. LLMs do this at mind-boggling scale for billions of parameters.

- Inference: at runtime, the model samples the next token from a probability distribution.

- Temperature controls randomness (lower = more deterministic, higher = more creative).

Concrete mini-example: “Virat Kohli plays with ___.” Early in training the model may wrongly assign high probability to nonsense tokens. The loss nudges parameters so “bat” becomes the most probable continuation over time.
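
The effect of temperature on next-token sampling can be sketched in a few lines of Python. This is a minimal illustration, not how any particular vendor implements sampling: the scores in `logits` are made-up numbers for the “Virat Kohli plays with ___” example, and the helper names `softmax_with_temperature` and `sample_next_token` are hypothetical.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [score / temperature for score in logits.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

def sample_next_token(logits, temperature, rng):
    """Draw one token at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores a trained model might assign after "Virat Kohli plays with"
logits = {"bat": 4.0, "ball": 2.5, "friends": 1.0, "banana": -1.0}

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, {t: round(p, 3) for t, p in probs.items()})

rng = random.Random(0)  # fixed seed so repeated runs behave the same
print(sample_next_token(logits, 0.2, rng))
```

At a low temperature almost all probability mass sits on “bat”; at a higher temperature the other tokens get a realistic chance of being picked, which is what “more creative” means in practice.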

From base model to helpful assistant (post-training & RLHF)

A freshly pre-trained base model is a talented but unpolished kid: it can mimic patterns but isn’t aligned with human expectations. Two big steps make it helpful:

- Post-training (instruction tuning): curated, higher-quality data teaches it how to follow instructions and comply with policy. Example: feed lots of Q→A pairs that say “I don’t know” when appropriate to reduce hallucinations.

- RLHF (Reinforcement Learning from Human Feedback): show the model multiple candidate answers; humans rank them; the model learns to prefer better behaviors (helpful, honest, harmless). Result: more civil, on-policy, and useful outputs.

Quick reference examples mentioned by the instructor

- Predictive AI: fraud detection in banking; spam classification; sales/weather forecasting; content recommendations.

- Generative AI tools/uses: code & doc generation; presentation tools like Gamma; site/app builders like Lovable; transcription (e.g., Whisper-style); image tools (Midjourney-style); video ad generation.

- Model ecosystem: OpenAI (GPT), Anthropic (Claude), Google (Gemini), Meta (Llama), plus others (e.g., Mistral, Grok).

- Infra: NVIDIA GPUs; managed ML stacks like Vertex AI, SageMaker.

Session 2: AI Product Lifecycle & Building Blocks

Discover how AI products are conceived and scaled. We’ll cover the AI Product Lifecycle, mapping real-world Gen AI use cases, and the building blocks you need to bring AI products to life. Plus, a walkthrough of Granola and ChatPRD in action.

- The AI Product Lifecycle: from idea → prototype → deployment

- Mapping Gen AI use cases across industries

- Core building blocks of AI products (data, models, infra, APIs)

- How Granola and ChatPRD demonstrate AI-driven product workflows

Slides 👇🏽

Key takeaways:

- The PM’s Core Job: A Product Manager’s purpose is always to solve user problems and achieve company outcomes. This fundamental rule does not change with AI.

- The Four Risks Framework is Essential: Every product, especially an AI product, must be evaluated against four critical risks: Is it Valuable (does someone need it?), Usable (can they use it?), Feasible (can we build it?), and Viable (does it make business sense?).

- Feasibility in AI is about Integration: Building an application-layer AI product is often about intelligently combining existing technologies and APIs (e.g., LLMs for summarization, Whisper for transcription, calendar APIs for data) to solve a user problem.

- Viability Hinges on Cost of Inference: The business viability of many AI products depends heavily on the cost of API calls to LLMs. Product Managers must be able to perform guesstimates of token usage (both input and output) to understand the cost per user and set appropriate pricing.

- Inspiration is Perishable, Action is Key: Motivation to learn about AI fades quickly. The only way to convert that inspiration into knowledge is through immediate, practical application, such as dissecting existing products and building your own projects.

Marty Cagan’s four risks (and why value comes first)

- Valuable: Does it solve a real problem or desire, for a well-defined audience?

- B2C examples: Instagram addresses boredom/validation.

- B2B examples: Slack fixes synchronous collaboration friction.

- Internal tools matter too (e.g., support dashboards to reduce “Where’s my order?” tickets).

Define the “who” as crisply as the “what”: product-market fit is “right product for the right audience.”

- Usable: Can your audience actually use it to get value?

A pivotal story: early APIs (like GPT-2 era) were powerful but dev-only; Chat UX unlocked mass adoption. Usability isn’t only UI; API products depend on great docs and predictable behaviors.

- Viable: Does it make business sense (market size, pricing, strategy, ROI)?

Estimate costs and willingness to pay; align to company goals.

- Feasible: Is it practical to build now (tech, talent, time, cost)?

Don’t vanish into bottomless tech tunnels; learn enough to reason about architecture and constraints.

Failure happens, but treat it as a milestone, not an endpoint. Always know why something failed (value, UX, business, or tech) and iterate.

Types of AI PMs

- AI-enabled PMs: use AI to be more productive in their current role.

- AI product PMs:

- Core PMs (model/infra) with deeper technical backgrounds.

- Applied PMs (application layer) who compose capabilities into user value.

How do large language models work?

An LLM is a next-token predictor trained on internet-scale text. Text is turned into tokens (numeric representations), fed into a transformer (a big mathematical function). The model predicts an output; we compare it with the known answer; the loss guides parameter updates. Repeat billions of times → a base model.
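
The predict, compare, update loop can be illustrated on a toy problem: recovering the slope and intercept of a straight line from example points. This is only a sketch of the idea under simple assumptions (a two-parameter model, mean squared error as the loss, plain gradient descent); real LLM training uses the same loop with billions of parameters and a different loss.

```python
# Toy data generated from y = 2x + 1; the "model" must recover m and c.
data = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]

m, c = 0.0, 0.0        # parameters start out wrong
learning_rate = 0.01   # how big each correction step is

def mean_squared_error(m, c):
    """Loss: average squared gap between prediction and known answer."""
    return sum((m * x + c - y) ** 2 for x, y in data) / len(data)

for step in range(2000):
    # Gradients of the loss with respect to m and c
    grad_m = sum(2 * (m * x + c - y) * x for x, y in data) / len(data)
    grad_c = sum(2 * (m * x + c - y) for x, y in data) / len(data)
    # Nudge each parameter in the direction that reduces the loss
    m -= learning_rate * grad_m
    c -= learning_rate * grad_c

print(round(m, 3), round(c, 3), mean_squared_error(m, c))
```

After enough repetitions the parameters settle close to the values that generated the data, which is the same mechanism that makes a plausible next token more probable over the course of training.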

Base models aren’t yet “helpful.” Post-training (e.g., supervised data, preference signals, constitutions) teaches helpfulness, safety, tone, and when to say “I don’t know,” reducing hallucinations. You’ll later learn contextualization (RAG) and fine-tuning to adapt models to your use case.

Case study 1: “Granola”

What it does: Install a desktop app, sync your calendar, and it passively activates during meetings. It listens locally (no awkward bot “joining”), transcribes audio, and generates an action-oriented summary (decisions, action items, open questions, topic-wise notes, notable quotes). A meeting-specific notepad opens automatically so you can capture context alongside AI notes.

Why users care (value): Professionals with back-to-back meetings don’t want a wall of raw transcript; they want clear actionables and next steps they can trust. That’s the real job to be done.

Why it feels smooth (usability): No intrusive attendee bot, automatic meeting detection from calendar, auto-created notes. The experience mirrors how people already work.

How it likely works (feasibility):

- OS-level audio permission to capture meeting audio.

- Calendar APIs to detect meeting start and metadata.

- ASR (e.g., a speech-to-text API) for transcription.

- An LLM call with a structured prompt to produce sections like “Key decisions” and “Action items.”

Will the business make sense (viability):

The instructor models cost of inference. For a typical 30-minute call, estimate input tokens (prompt + transcript length) and output tokens (the summary). Words per minute (~120) × minutes gives rough token counts. Multiply by meetings per month for casual, regular, and power users to estimate per-user monthly tokens and cost. Choose right-sized models (cheaper for summarization; expensive only when needed). You can self-host open-source models, but you still pay infra. The point: PMs should be able to back-of-the-envelope the economics.
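
A back-of-the-envelope version of this estimate might look like the sketch below. Every number except the ~120 words per minute is an illustrative assumption: the tokens-per-word ratio is a rough rule of thumb for English, the prompt and summary sizes are guesses, the prices are placeholders rather than any vendor's real rates, and the meetings-per-month figures for each user segment are invented.

```python
# All constants are illustrative assumptions, not real vendor prices.
WORDS_PER_MINUTE = 120       # speaking rate used in the session
TOKENS_PER_WORD = 1.3        # rough rule of thumb for English text
PROMPT_TOKENS = 500          # hypothetical size of the instruction prompt
SUMMARY_TOKENS = 600         # hypothetical size of the generated summary
PRICE_PER_M_INPUT = 0.50     # hypothetical USD per 1M input tokens
PRICE_PER_M_OUTPUT = 1.50    # hypothetical USD per 1M output tokens

def cost_per_meeting(minutes):
    """Estimated LLM cost in USD for summarizing one meeting."""
    transcript_tokens = minutes * WORDS_PER_MINUTE * TOKENS_PER_WORD
    input_tokens = PROMPT_TOKENS + transcript_tokens
    input_cost = input_tokens * PRICE_PER_M_INPUT
    output_cost = SUMMARY_TOKENS * PRICE_PER_M_OUTPUT
    return (input_cost + output_cost) / 1_000_000

def monthly_cost(meetings_per_month, minutes=30):
    """Estimated LLM cost in USD per user per month."""
    return meetings_per_month * cost_per_meeting(minutes)

# Hypothetical usage levels for three user segments
segments = {"casual": 10, "regular": 40, "power": 120}
for name, meetings in segments.items():
    print(f"{name}: ${monthly_cost(meetings):.2f} per user per month")
```

The sketch covers only the LLM call; transcription and infrastructure costs would need their own line items before comparing the total against a subscription price.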

Case study 2: “ChatPRD”, a PRD assistant

What it does: You provide context (users, goals, constraints). The system uses a prompt like “You are a seasoned PM. Produce a PRD in this format…” and returns a structured draft you can edit line-by-line. It can also critique an existing PRD.

Value: Saves hours for PMs who must follow a standard template and often start from a blank page.

Usability: Familiar chat interface, direct editing, iterative prompting.

Feasibility: Simple LLM call with a strong template and follow-up questions to close gaps.

Viability: If a PM’s time is valuable, even a few hours saved per month justify a low subscription. Costs remain manageable because token budgets for PRDs are smaller than always-on assistants.

Learning philosophy: how to actually get good

Two lines to remember:

- “Talent is the pursuit of interest.” You won’t wake up an expert; keep practicing the thing you care about.

- “Learning is course correction.” Try, get feedback, adjust. Theory is the map; building and teardown are the road.

Session 3: Context Engineering, RAG & Case Studies

- What is Context Engineering and why it’s critical for AI PMs

- Retrieval-Augmented Generation (RAG) explained with examples

- Prompt engineering best practices for PMs

- Fine-tuning models: when and how to apply it

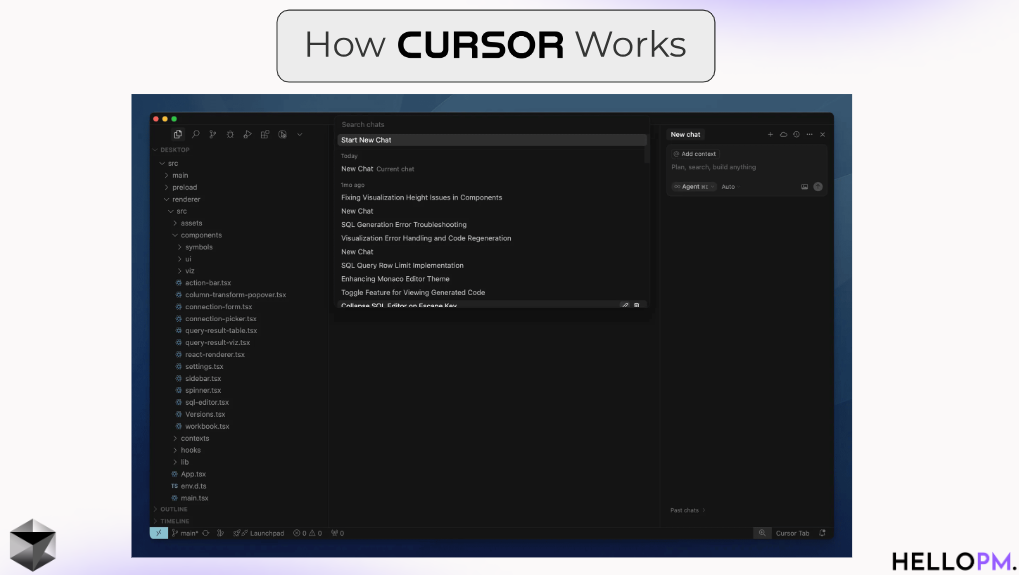

- Case Study 1: Cursor – building an AI-first developer experience

- Case Study 2: Lovable – AI for design and productivity

Slides 👇🏽

📚 Resources:

Research on “How growing context reduces the model output quality”

Cornell research paper on Prompt Engineering.

Key takeaways:

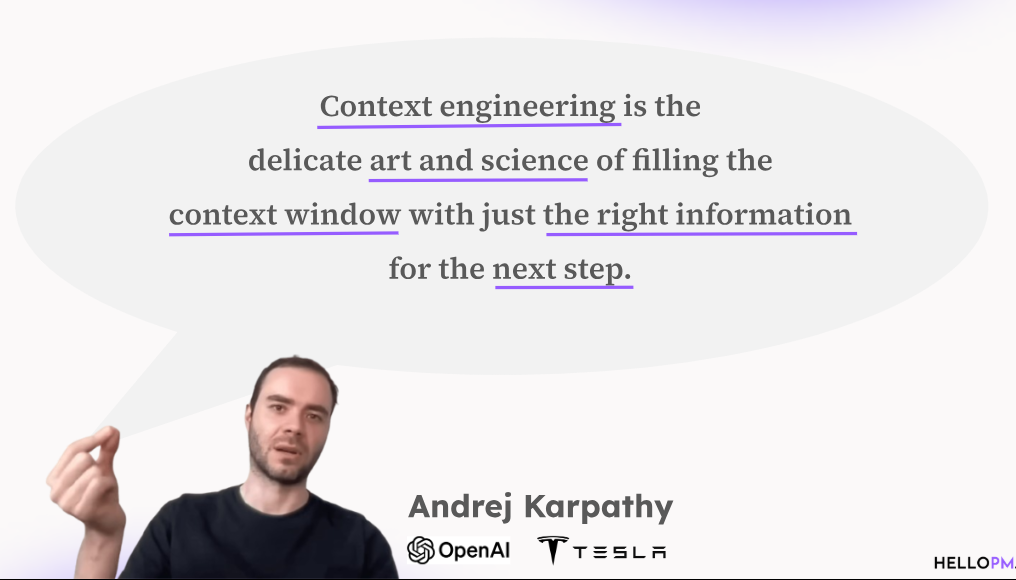

- Context engineering = the art and science of filling the model’s context window with just the right info for the next step.

- Long prompts aren’t better: too much context degrades quality; pick and compress only what’s relevant.

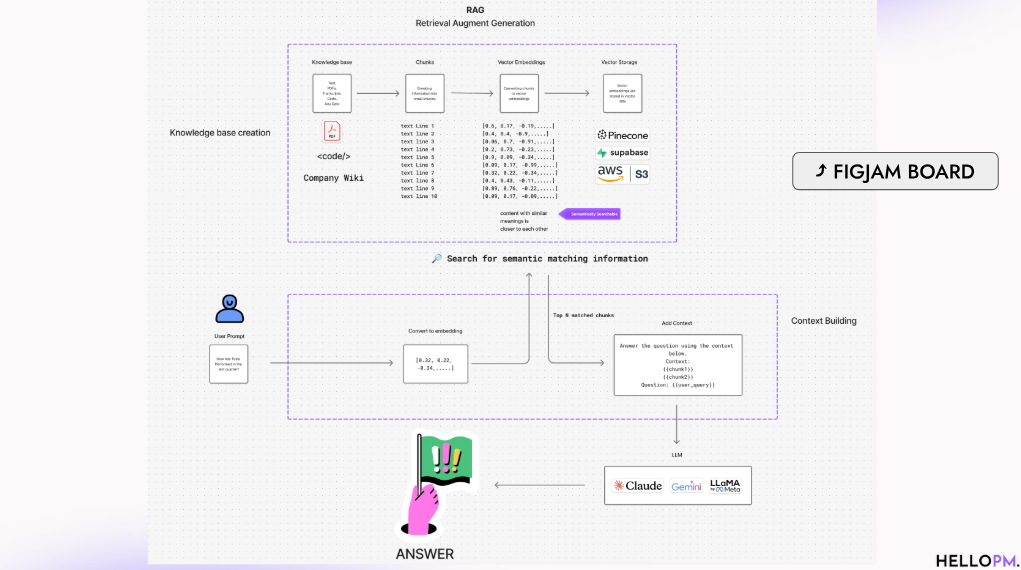

- RAG workflow: ingest → chunk → embed → store (vector DB) → retrieve top-N → compose prompt → answer.

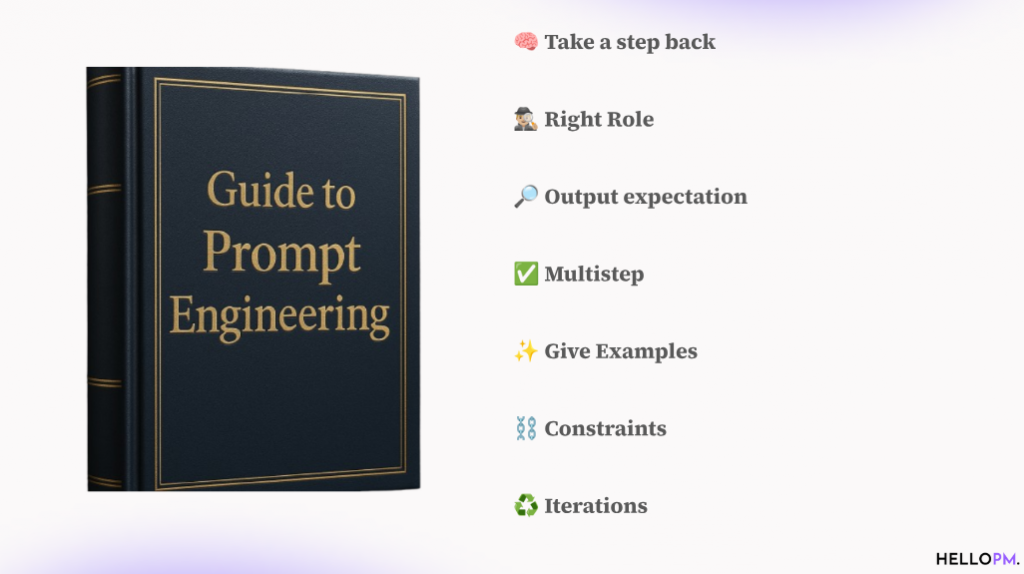

- Prompt engineering still matters (roles, examples, constraints, multi-step), and you can systematize it with text expanders and a prompt repo.

- Fine-tune when you need style/behavior/domain expertise, often on smaller models to cut inference costs.

- Decision rule: Prompting for fixed/contained tasks; RAG for missing/dynamic knowledge; Fine-tune for behavior/style/domain.

- Cursor IDE shows RAG + prompting in action on codebases, with human-in-the-loop safeguards.

Introduction to Context Engineering

Context engineering is about helping an AI answer real questions about your work by giving it only the small, relevant pieces of information it needs at that moment. Public models like ChatGPT know general facts, but they don’t know your Notion pages, Jira tickets, or Stripe data, so they can’t tell you “my pending tasks” unless you share context. You can’t just dump everything in: the model’s context window (the amount it can read at once) is limited, and too much input makes it slow, confused, and more likely to invent answers. Instead, we pick the right snippets (the one task list, the one spec, or the one user record) and place them in front of the model for that single question. Think of it like packing a small backpack for a short trip: carry only what’s needed, nothing extra. Done well, this keeps answers accurate, fast, and grounded in your data without overwhelming the AI.

RAG, part 1: building an LLM-ready knowledge base

To answer, “What initiatives are our engineers working on?” against your Notion workspace, you don’t upload the entire workspace. You:

- Collect the sources (docs, PDFs, transcripts, code).

- Chunk them into meaningful pieces (e.g., ~400–800 tokens with an overlap/sliding window so concepts carry across boundaries).

- Example: for a long paragraph, chunk words 1–500, then 401–900, etc., preserving continuity.

- For code, chunk by function or class to keep logic intact.

- Embed each chunk into a high-dimensional vector that captures meaning (so “engineer” ≈ “developer”; “ladyfinger” ≈ “okra”).

- Store vectors in a vector database (e.g., Pinecone, Supabase, Chroma, or AWS’s vector options) to enable fast semantic search.
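
The sliding-window chunking step can be sketched as follows. This is a simplified illustration that counts words rather than tokens (a real pipeline would usually count tokens with the model's tokenizer), and the function name `chunk_words` and the placeholder document are assumptions made for the example.

```python
def chunk_words(text, chunk_size=500, overlap=100):
    """Split text into windows of chunk_size words that share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    stride = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), stride):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks

# 1,200 placeholder words named w1 ... w1200 so the boundaries are easy to see
document = " ".join(f"w{i}" for i in range(1, 1201))
chunks = chunk_words(document)
for chunk in chunks:
    words = chunk.split()
    print(words[0], "...", words[-1], f"({len(words)} words)")
```

With a 500-word window and a 100-word overlap, the windows start at words 1, 401, and 801, matching the “1–500, then 401–900” pattern described above. Each chunk would then be passed to an embedding model and stored with its vector.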

RAG, part 2: from user question to grounded answer

When a user asks a question:

- Embed the query (no chunking needed unless unusually long).

- Retrieve top-N semantically similar chunks (N is tuned: start small, then iterate based on answer quality).

- Assemble the prompt: brief instructions, the retrieved context snippets (optionally compressed), and the question.

- Call the LLM (GPT/Claude/Gemini/Llama) and return the grounded answer.

This keeps responses accurate, private, and cost-effective, especially for constantly changing data (Notion notes, Jira tickets, Confluence pages).
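
The query-time steps can be sketched end to end in plain Python. Note the big simplification: `embed` here is a toy bag-of-words counter standing in for a real embedding model, so it matches shared words only and would not capture synonyms such as “engineer” and “developer”. The in-memory `store` list stands in for a vector database, and the sample chunks are invented.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().replace("?", "").replace(".", "").split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, store, top_n=2):
    """Return the top_n chunk texts most similar to the question."""
    query_vec = embed(question)
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_n]]

def build_prompt(question, snippets):
    """Assemble brief instructions, retrieved context, and the question."""
    context = "\n".join(f"- {s}" for s in snippets)
    return (
        "Answer using only the context below. Say \"I don't know\" if it is not covered.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical workspace chunks; in practice these come from the ingestion step.
chunks = [
    "Engineers are working on the checkout redesign initiative this quarter.",
    "The marketing team is planning the spring newsletter.",
    "Engineers are also working on the search latency initiative.",
    "Office lunch menu for Friday is pasta.",
]
store = [(text, embed(text)) for text in chunks]  # stands in for a vector DB

question = "What initiatives are our engineers working on?"
prompt = build_prompt(question, retrieve(question, store))
print(prompt)  # this string would be sent to the LLM of your choice
```

Only the two engineering-related chunks end up in the prompt, so the model never sees the unrelated notes. Tuning `top_n` is the code-level equivalent of “start small, then iterate”.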

Prompt engineering principles that actually move the needle

The instructor stressed two angles: (1) for your own productivity, and (2) as a PM designing prompts inside products.

Core practices:

- Step back first: Clarify the real goal before typing.

- Assign a role: “You are a senior PM at X who interviews candidates…”

- Specify output format: “Return a table/JSON/stepwise plan.”

- Think in multi-steps: Ask the model to reason sequentially (e.g., validate user needs → define metrics → draft PRD → draft FAQs).

- Provide examples: Paste a model article/PRD and ask to mimic its structure and tone; quality jumps.

- Add constraints: For recurring flaws (e.g., em-dash overuse), say “Avoid; use commas instead.”

- Iterate relentlessly: “Good is the enemy of best.” Treat prompts as living artifacts.

Mini-example: instead of “Write an article on GenAI for PMs,” paste a well-liked article and ask the model to follow its sections, tone, and level; you’ll get far better structure and polish.
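
One way to systematize these practices inside a product or a personal prompt repo is a small template function. The sketch below is one possible shape, not a standard: the function name `compose_prompt`, its parameters, and the sample values are all assumptions made for illustration.

```python
def compose_prompt(role, task, output_format, steps=(), example="", constraints=()):
    """Assemble a prompt from a role, ordered steps, output format, example, and constraints."""
    parts = [f"You are {role}.", f"Task: {task}"]
    if steps:
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        parts.append(f"Work through these steps in order:\n{numbered}")
    parts.append(f"Output format: {output_format}")
    if example:
        parts.append(f"Follow the structure and tone of this example:\n{example}")
    if constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    return "\n\n".join(parts)

prompt = compose_prompt(
    role="a senior product manager",
    task="Draft a PRD for a meeting-notes summarizer.",
    output_format="A table with columns Section and Content.",
    steps=["Validate user needs", "Define metrics", "Draft PRD", "Draft FAQs"],
    constraints=["Avoid em-dashes; use commas instead."],
)
print(prompt)
```

Keeping the pieces as separate arguments makes it easy to iterate on one element, such as a constraint, without rewriting the whole prompt.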

What is Fine Tuning?

Fine-tuning adapts a pretrained model to your domain style/behavior, using curated examples (e.g., support chats, analyst notes). Use it when you need consistent voice, tone, or specialized reasoning that prompting + RAG can’t reliably produce.

- Full fine-tuning updates lots of parameters (costly).

- LoRA (Low-Rank Adaptation) adds small trainable adapters, which is cheaper and faster.

- Small-model strategy: Generate high-quality synthetic training data with a powerful model, then fine-tune a smaller open model (e.g., Llama/Mistral). Net result: a specialized model with 1/10th–1/100th the inference cost and lower latency, which is great for production.
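
Why LoRA is cheaper than full fine-tuning comes down to simple arithmetic: instead of updating a whole weight matrix, it trains two thin matrices whose product has the same shape. The sketch below counts trainable weights for a single layer; the layer size and rank are hypothetical values chosen for illustration, not figures from the session.

```python
def full_finetune_params(d_out, d_in):
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable weights for two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

d_out = d_in = 4096   # hypothetical layer size
rank = 8              # hypothetical LoRA rank

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

For this layer the adapter trains well under 1% of the weights that full fine-tuning would touch, which is where the savings in training cost and time come from.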

Choosing the right tool (decision guide)

- Use Prompting when the task is self-contained and context is short/static (e.g., summarizing a single transcript, drafting a PRD from provided notes).

- Use RAG when the blocker is missing/dynamic knowledge scattered across internal sources (Notion/Jira/Confluence/Stripe).

- Use Fine-tuning when you need brand tone, domain nuance, or behavioral changes that aren’t feasible to encode via prompts/RAG alone.

Most strong products combine them: RAG for facts, prompting for instructions, fine-tuning for style/behavior.

Case study: Cursor

Cursor demonstrates context engineering on code:

- Foundation: Cursor is a fork of VS Code, so it is a familiar editor augmented with AI.

- Two AI modes:

- Inline autofill: as you type, it suggests multi-line completions for speed.

- Chat/agentic mode: it indexes your entire codebase (via chunking + embeddings, described as using “TurboPuffer”) and stores vectors. When you ask for a feature or refactor, it retrieves relevant code chunks, composes a context-rich prompt, and generates changes.

- Max mode: gives access to very large context windows for whole-repo reasoning.

- Human-in-the-loop: Cursor proposes changes, but you review/accept; it creates commits/snapshots so you can revert, building trust without full automation.

Human-in-the-loop UX

The instructor noted many enterprise AI rollouts fail because they automate without considering trust, workflow, and UX. Successful apps (Cursor, ChatGPT, Gmail features) use co-pilot patterns: AI drafts; humans supervise, edit, and approve. As a PM, design for oversight, diffs, undo, and progressive automation.

Worked examples to anchor the ideas

- Notion question (“What are engineers working on?”): RAG pulls only items tagged with engineer names/initiatives → compress → assemble prompt → accurate, concise summary.

- Vocabulary gap (“okra” vs “ladyfinger”): embeddings place them near each other in vector space, so retrieval still works.

- Bank support bot: fine-tune on past Q&A to capture tone (“empathetic, compliant responses”) and policies; combine with RAG for latest account/product info.

- Analytics without exposing data: share only table schemas so the LLM writes queries; analysts execute them internally.

Quick glossary

- Embedding: numeric vector encoding meaning of text/code; enables semantic search.

- Vector DB: specialized store for embeddings; supports similarity search.

- Inference: running the model to produce an output (answers, code, summaries).

- Chunking: splitting long docs into overlapping pieces that preserve context.

- LoRA: lightweight fine-tuning by adding small adapter layers.

Session 4: AI Agents, Vibe Coding & Career Prep

- Understanding AI Agents: autonomous decision-making systems

- Vibe Coding: building human-friendly AI experiences

- Creating your AI Product Portfolio with real projects

- Interview prep for AI Product Manager roles: skills, frameworks, and questions

- Pathways to becoming an AI Product Manager in top tech companies

Slides 👇🏽

📚 Resources:

Lovable tutorial from Lovable.

Key Takeaways:

- AI Agents are the future of automation. They are systems that combine an LLM’s intelligence, the action of tools via APIs, and autonomy to learn and make decisions.

- The value is in solving problems, not just using technology. The most successful AI implementations come from a deep understanding of user workflows and problems, not just a fascination with the tech itself.

- Building is more accessible than ever. Tools like N8N and Lovable.dev have commoditized the technology, allowing you to create complex agents and full-stack web applications with natural language and minimal code.

- Don’t be afraid to fail and iterate. The reality of working with AI tools involves hitting walls, debugging errors, and iterating. The key is to use AI to help you solve the problems you encounter along the way.

- A portfolio of used projects is your strongest asset. A side project only becomes truly valuable when it’s launched, used by others, and improved based on their feedback. The real sprint begins now.

Understanding AI Agents: Intelligence Meets Action

The instructor kicked off the finale by introducing the concept of AI Agents. He explained that while Large Language Models (LLMs) possess incredible intelligence, the ability to Understand, Transform, and Generate (UTG), they are like a brain without hands or legs. They can think, but they can’t act in the digital world.

Tools like Gmail, Slack, and Calendly are the “hands and legs,” capable of performing actions like sending emails or booking meetings. An AI Agent is what you get when you combine the two: an intelligent LLM that can use tools to perform tasks autonomously.

The core components of an agent are:

- Intelligence: The LLM serves as the decision-making brain.

- Action: The agent connects to external tools via APIs (Application Programming Interfaces) to execute tasks.

- Autonomy: The agent has the freedom to decide which tools to use and in what order to achieve a goal. It can also learn from feedback to improve over time.

To make this concrete, the instructor outlined a real-world agent workflow he could build to manage his speaking invitations:

- Trigger: A new email arrives in Gmail.

- Intelligence (LLM): The agent reads the email to determine: 1) Is this an invitation? 2) Is it from a college or a corporate entity?

- Action (Tools):

- If it’s from a college, the agent replies via the Gmail API with a standard Calendly link for free slots.

- If it’s from a corporate entity, it replies with a different Calendly link for paid consultations.

- If the LLM is unsure, it sends a notification to the instructor on Slack, bringing a human into the loop.

This example shows how an agent can automate a multi-step, decision-based workflow, saving significant time and effort.
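
The decision logic of this workflow can be sketched without any real integrations. In the sketch below, `classify_email` is a keyword-based stand-in for the LLM call, the two links are placeholders, and the strings `gmail.reply` and `slack.notify` are just labels for the tool that would be invoked, not real API method names.

```python
def classify_email(body):
    """Stand-in for the LLM: returns 'college', 'corporate', 'not_invitation', or 'unsure'."""
    text = body.lower()
    if "invite" not in text and "invitation" not in text:
        return "not_invitation"
    if "college" in text or "university" in text:
        return "college"
    if "company" in text or "corporate" in text:
        return "corporate"
    return "unsure"

# Hypothetical links; the real ones would be the instructor's own.
FREE_LINK = "https://example.com/free-slots"
PAID_LINK = "https://example.com/paid-consultation"

def handle_email(body):
    """Decide which tool to use; returns (tool, payload) instead of calling real APIs."""
    label = classify_email(body)
    if label == "college":
        return ("gmail.reply", f"Happy to speak. Please pick a slot: {FREE_LINK}")
    if label == "corporate":
        return ("gmail.reply", f"Thanks for reaching out. Please book here: {PAID_LINK}")
    if label == "unsure":
        return ("slack.notify", "Please review this invitation manually.")
    return ("noop", "")

print(handle_email("We invite you to speak at our college fest."))
print(handle_email("Invitation: keynote at an event next month."))
```

The second email is an invitation with no clear sender type, so it is routed to a human instead of being answered automatically, which is the human-in-the-loop fallback from the workflow.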

MCP (Model Context Protocol): unify tools with natural language

Every API is different. That fragmentation makes agents brittle. MCP, a protocol popularized by Anthropic, gives models a consistent way to discover a tool’s capabilities and call them safely. Example the instructor highlights: Razorpay’s MCP server. With MCP set up, you can ask your model in plain English for “last 10 transactions,” “failed payment trends,” or “create a ₹10 payment link for Kushan,” and the model will call the right tool method behind the scenes.

Why it matters:

- Developer speed: include the MCP manifest; let the model translate NL → API calls.

- Safety: first-party MCP servers typically expose read/limited-write scopes (e.g., no bank-account changes).

- Reality check: MCP is promising but not yet universal; many teams still integrate direct APIs for full control.
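
Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages, with `tools/list` to discover tools and `tools/call` to run one. The sketch below only builds those request payloads so the shape is visible. The tool name `create_payment_link` and its argument names are hypothetical, not Razorpay's actual tool schema, and a real client would normally use an MCP SDK rather than hand-built dictionaries.

```python
import json

def list_tools_request(request_id):
    """JSON-RPC request asking an MCP server which tools it exposes."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}

def call_tool_request(request_id, tool_name, arguments):
    """JSON-RPC request asking an MCP server to run one tool with arguments."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

# Hypothetical tool name and arguments, for illustration only.
request = call_tool_request(
    2, "create_payment_link", {"amount": 10, "currency": "INR", "customer_name": "Kushan"}
)
print(json.dumps(list_tools_request(1)))
print(json.dumps(request, indent=2))
```

The model's job is to turn a sentence like “create a ₹10 payment link for Kushan” into the `name` and `arguments` fields; the protocol keeps the envelope around them the same for every tool.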

Hands-On with AI Tools: From Theory to Practice

This section was dedicated to live walkthroughs of key tools, emphasizing that they are designed to be simple and can be learned in minutes, not hours.

Google’s NotebookLM

The instructor called this the best tool for studying and building knowledge. NotebookLM allows you to upload multiple sources (PDFs, websites, Google Docs, and even YouTube video links) to create a personalized knowledge base. He demonstrated by adding the links to all the AI Sprint video sessions. The tool then allows you to ask questions, generate FAQs, create summaries, and even produce a high-quality audio overview podcast based only on the sources you provided. This is a powerful way to revise content and distill key insights quickly.

n8n: Building Your Own AI Agent

n8n is a powerful, source-available (fair-code licensed) workflow automation tool for building AI agents. The core concept is a visual canvas where you connect nodes. Each node performs a specific action: a trigger (like a form submission or a new email), a call to an LLM, or an action with a tool (like sending an email through Gmail).

The instructor showed how to create the email-sorting agent described earlier. More importantly, he demonstrated the real-world process of building with AI. When trying to import a workflow generated by ChatGPT, he ran into a JSON error. Instead of giving up, he showed the process of iterative debugging:

- He copied the error and fed it back to ChatGPT.

- When the error persisted, he switched to a different LLM (Gemini) with a clearer prompt.

- Gemini successfully generated the correct JSON file, which he imported into n8n to create the workflow instantly.

This was a critical lesson: don’t be afraid to hit a wall. The process is about using AI as a partner to solve the problems that arise.
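One way to shorten that debugging loop is to check the generated JSON locally before importing it, and to paste the exact parser error back into the LLM. The sketch below uses only Python's standard `json` module. The check for a top-level `"nodes"` key is an assumption about what a workflow export looks like, not a full validation of n8n's format, and `check_workflow_json` is a hypothetical helper name.

```python
import json


def check_workflow_json(raw: str) -> tuple:
    """Return (ok, message); the message is suitable for pasting back into an LLM."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        # lineno, colno, and msg pinpoint where the parser gave up.
        return False, f"Invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
    # Assumption: a workflow export is an object with a top-level "nodes" key.
    if not isinstance(data, dict) or "nodes" not in data:
        return False, 'Parsed, but no top-level "nodes" key was found.'
    return True, 'Well-formed JSON with a top-level "nodes" key.'


if __name__ == "__main__":
    ok, message = check_workflow_json('{"nodes": [,]}')
    print(ok, message)
```

A precise message such as the line and column of the failure gives the LLM far more to work with than "the import failed," which is the same lesson the instructor drew from switching to a clearer prompt.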

Lovable.dev: From Prompt to Full-Stack App

Lovable is a “vibe coding” tool that generates a complete, full-stack web application from a natural language prompt. The instructor followed a best-practice workflow:

- Plan: He first used Gemini to generate a detailed prompt for a Pomodoro timer application, specifying features like a timer, task tracking, daily reports, and a productivity score.

- Generate: He fed this prompt into Lovable, which generated the entire front-end and back-end code for the application in minutes.

- Iterate: Using Lovable’s editor, he made specific changes with simple commands, like changing a button’s text or adding a welcome message, demonstrating the “human-in-the-loop” design.

The most powerful part was the final step: exporting the entire codebase to GitHub. This means you are not locked into Lovable’s ecosystem. You can generate a V1 of your product with a prompt, export the code, and then hand it to a developer (or open it in an AI IDE like Cursor) for more advanced customization.

Building Your AI Portfolio: The Five-Step Plan

The instructor stressed that knowledge is useless without application. The ultimate goal is to build a portfolio that showcases your skills. He provided a simple, five-step framework:

- Solve a Real Problem: Start with a problem that you or people you know actually face.

- Understand the Problem Deeply: Do the product management work. Your product thinking is more important than your technical skill.

- Build It: Use the tools you’ve learned (Lovable, n8n, etc.) to create a solution.

- Launch & Get Traction: A side project is just a prototype until people use it. Share it with your network and get feedback.

- Iterate: Evolve your product based on user feedback. This process of iteration is what truly demonstrates your capability.

He also shared “The Side Project Stack,” a list of recommended tools for every stage of the process, from ideation (Reddit, Google Trends) and design (Dribbble, Mobbin) to marketing (Product Hunt, LinkedIn) and analytics (Mixpanel).

Mindset: start, then refine

The instructor’s mantra: “You don’t have to be perfect to start; you have to start to get perfect.” Don’t get overwhelmed by big tools; “eat the elephant one bite at a time.” Sign up, try a small workflow, expect to hit walls, and let AI help you climb them. Keep a human in the loop, make safe tool connections (favor first-party MCP servers where offered), and ship.

Example mini-plan you can copy today:

- Use NotebookLM to synthesize your AI Sprint notes into a 1-pager + audio overview.

- In n8n, recreate the email triage agent (Gmail → LLM classify → Calendly/Gmail → Slack fallback).

- In Lovable, prompt a Pomodoro tracker (or a tool your team would actually use), publish it, export to GitHub, and enhance it in Cursor.

- Post your notes to earn participation, and submit your working app to the hackathon for merit.

If you do just that, you’ll have learned the tech, shipped something real, and added portfolio-grade evidence, which is exactly what this finale set you up to do.

Your Journey is Just Beginning

Congratulations on finishing the course! You’ve successfully turned complex AI concepts into a practical toolkit. You now have the framework and confidence to build the next generation of intelligent products.

Remember the most important lesson: technology will always change, but your mission as a product leader doesn’t. Stay focused on solving real human problems; AI is now simply your most powerful tool for doing so.

Your learning journey truly begins now, by applying these skills. Take what you’ve learned and build something, no matter how small. You have the foundation to be a leader in this new era of technology. Now, go build amazing things.